The UK’s medical device regulator has admitted it has concerns about VC-backed AI chatbot maker Babylon Health. It made the admission in a letter sent to a clinician who has been raising the alarm about Babylon’s approach to patient safety and corporate governance since 2017.

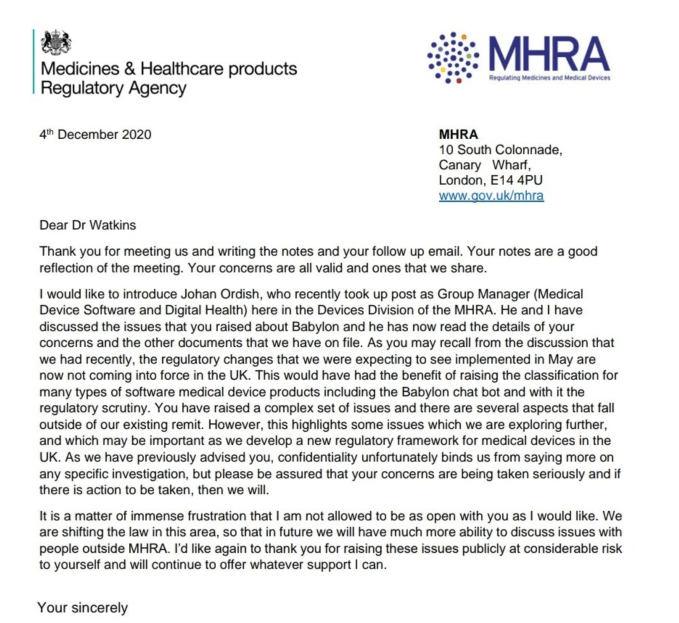

The HSJ reported on the MHRA’s letter to Dr David Watkins yesterday. TechCrunch has reviewed the letter (see below), which is dated December 4, 2020. We’ve also seen additional context about what was discussed in a meeting referenced in the letter, and have reviewed other correspondence between Watkins and the regulator in which he details a number of wide-ranging concerns.

In an interview he emphasised that the concerns the regulator shares are “far broader” than the (important but) single issue of chatbot safety.

“The issues relate to the corporate governance of the company: how they approach safety concerns. How they approach people who raise safety concerns,” Watkins told TechCrunch. “That’s the concern. And some of the ethics around the mis-promoting of medical devices.

“The overall story is that they did promote something that was dangerously flawed. They made misleading claims with regards to how [the chatbot] should be used (its intended use), with [Babylon CEO] Ali Parsa promoting it as a ‘diagnostic’ system, which was never the case. The chatbot was never approved for ‘diagnosis’.”

“In my opinion, in 2018 the MHRA should have taken a much firmer stance with Babylon and made it clear to the public that the claims that were being made were false, and that the technology was not approved for use in the way that Babylon were promoting it,” he went on. “That should have happened and it didn’t happen because the regulations at that time were not fit for purpose.”

“In reality there is no regulatory ‘approval’ process for these technologies and the legislation doesn’t require a company to act ethically,” Watkins also told us. “We’re reliant on the healthtech sector behaving responsibly.”

The consultant oncologist began raising red flags about Babylon with UK healthcare regulators (CQC/MHRA) as early as February 2017, initially over the “apparent absence of any robust clinical testing or validation”, as he puts it in correspondence to regulators. However, as Babylon opted to deny problems and go on the attack against critics, his concerns mounted.

An admission by the medical devices regulator that all of Watkins’ concerns are “valid” and are “ones that we share” blows Babylon’s deflective PR tactics out of the water.

“Babylon cannot say that they have always adhered to the regulatory requirements; at times they have not adhered to the regulatory requirements. At different points throughout the development of their system,” Watkins also told us, adding: “Babylon never took the safety concerns as seriously as they should have. Hence this issue has dragged on over a more than three year period.”

During this time the company has been steaming ahead, inking wide-ranging ‘digitization’ deals with healthcare providers around the world, including a 10-year deal agreed with the UK city of Wolverhampton last year to provide an integrated app that is intended to reach 300,000 people.

It also has a 10-year agreement with the government of Rwanda to support digitization of its health system, including via digitally enabled triage. Other markets it has rolled into include the US, Canada and Saudi Arabia.

Babylon says it now covers more than 20 million patients and has done 8 million consultations and “AI interactions” globally. But is it operating to the high standards people would expect of a medical device company?

Safety, ethical and governance concerns

In a written summary, dated October 22, of a video call which took place between Watkins and the UK medical devices regulator on September 24 last year, he summarizes what was discussed as follows: “I talked through and expanded on each of the points outlined in the document, specifically; the misleading claims, the dangerous flaws and Babylon’s attempts to deny/suppress the safety issues.”

In his account of this meeting, Watkins goes on to report: “There appeared to be general agreement that Babylon’s corporate behaviour and governance fell below the standards expected of a medical device/healthcare provider.”

“I was informed that Babylon Health would not be shown leniency (given their relationship with [UK health secretary] Matt Hancock),” he also notes in the summary. This is a reference to Hancock being a publicly enthusiastic user of Babylon’s ‘GP at hand’ app (for which he was accused in 2018 of breaking the ministerial code).

In a separate document, which Watkins compiled and sent to the regulator last year, he details 14 areas of concern. These cover issues including the safety of the Babylon chatbot’s triage; “misleading and conflicting” T&Cs, which he says contradict promotional claims the company has made to hype the product; and what he describes as a “multitude of ethical and governance concerns”, including its aggressive response to anyone who raises concerns about the safety and efficacy of its technology.

This has included a public attack campaign against Watkins himself, which we reported on last year, as well as what he lists in the document as “legal threats to avoid scrutiny & adverse media coverage”.

Here he notes that Babylon’s response to safety concerns he had raised back in 2018, which had been reported on by the HSJ, was also to go on the attack, with the company claiming at the time that “vested interests” were spreading “false allegations” in an attempt to “see us fail”.

“The allegations were not false and it is clear that Babylon chose to mislead the HSJ readership, opting to place patients at risk of harm, in order to protect their own reputation,” writes Watkins in associated commentary to the regulator.

He goes on to point out that, in May 2018, the MHRA had itself independently notified Babylon Health of two incidents related to the safety of its chatbot (one involving missed symptoms of a heart attack, another missed symptoms of DVT). Yet the company still went on to publicly trash the HSJ’s report the following month, which was entitled: ‘Safety regulators investigating concerns about Babylon’s “chatbot”’.

Wider governance and operational concerns Watkins raises in the document include Babylon’s use of staff NDAs, which he argues lead to a culture inside the company where staff feel unable to speak out about any safety concerns they may have. He also cites what he calls “inadequate medical device vigilance”: he says the Babylon bot does not routinely request feedback on the patient outcome after triage, arguing that “The absence of any robust feedback system significantly impairs the ability to identify adverse outcomes”.

On the subject of unvarnished staff opinions, it’s interesting to note that Babylon’s Glassdoor rating at the time of writing is just 2.9 stars, with only a minority of reviewers saying they would recommend the company to a friend, and with Parsa’s approval rating as CEO at only 45% on aggregate. (“The technology is outdated and flawed,” writes one Glassdoor reviewer who is listed as a current Babylon Health employee working as a clinical ops associate in Vancouver, Canada, where privacy regulators have an open investigation into its app. Among the cons listed in the one-star review is the claim that: “The well-being of patients is not seen as a priority. A real joke to healthcare. Best to avoid.”)

Per Watkins’ account of his online meeting with the MHRA, he says the regulator agreed NDAs are “problematic” and impact the ability of staff to speak up on safety issues.

He also writes that it was acknowledged that Babylon staff may fear speaking up because of legal threats. His minutes further record that: “Comment was made that the MHRA are able to look into concerns that are raised anonymously.”

In the summary of his concerns about Babylon, Watkins also flags an event the company held in London in 2018 to promote its chatbot, during which, he writes, it made a number of “misleading claims”, such as that its AI generates health advice that is “on-par with top-rated practicing clinicians”.

The flashy claims led to a blitz of hyperbolic headlines about the bot’s capabilities, helping Babylon to generate hype at a time when it was likely to have been pitching investors to raise more funding.

The London-based startup was valued at $2BN+ in 2019 when it raised a massive $550M Series C round from investors including Saudi Arabia’s Public Investment Fund, a large (unnamed) U.S.-based health insurance company and insurance giant Munich Re’s ERGO Fund. At the time it trumpeted the raise as the largest-ever in Europe or the U.S. for digital health delivery.

“It should be noted that Babylon Health have never withdrawn or attempted to correct the misleading claims made at the AI Test Event [which generated press coverage it’s still using as a promotional tool on its website in certain jurisdictions],” Watkins writes to the regulator. “Hence, there remains an ongoing risk that the public will put undue faith in Babylon’s unvalidated medical device.”

In his summary he also includes several pieces of anonymous correspondence from a number of people claiming to work (or to have worked) at Babylon, which make a number of additional claims. “There is huge pressure from investors to demonstrate a return,” writes one of these. “Anything that slows that down is seen [a]s avoidable.”

“The allegations made against Babylon Health are not false and were raised in good faith in the interests of patient safety,” Watkins goes on to assert in his summary to the regulator. “Babylon’s ‘repeated’ attempts to actively discredit me as an individual raises serious questions regarding their corporate culture and trustworthiness as a healthcare provider.”

In its letter to Watkins (screengrabbed below), the MHRA tells him: “Your concerns are all valid and ones that we share”.

It goes on to thank him for personally and publicly raising issues “at considerable risk to yourself”.

Letter from the MHRA to Dr David Watkins (Screengrab: TechCrunch)

Babylon has been contacted for a response to the MHRA’s validation of Watkins’ concerns. At the time of writing it had not responded to our request for comment.

The startup told the HSJ that it meets all the local requirements of regulatory bodies in the countries it operates in, adding: “Babylon is committed to upholding the highest of standards when it comes to patient safety.”

In one aforementioned aggressive incident last year, Babylon put out a press release attacking Watkins as a ‘troll’ and seeking to discredit the work he was doing to highlight safety issues with the triage performed by its chatbot.

It also claimed its technology had been “NHS validated” as a “safe service 10 times”.

It’s not clear what validation process Babylon was referring to there, and Watkins also flags and queries that claim in his correspondence with the MHRA, writing: “As far as I am aware, the Babylon chatbot has not been validated, in which case, their press release is misleading.”

The MHRA’s letter, meanwhile, makes it clear that the current UK regulatory regime for software-based medical device products does not adequately cover software-powered ‘healthtech’ devices such as Babylon’s chatbot.

Per Watkins, there is currently no approval process. Such devices are merely registered with the MHRA, but there is no legal requirement for the regulator to assess them or even to receive documentation related to their development. He says they exist independently, with the MHRA simply holding a register.

“You have raised a complex set of issues and there are several aspects that fall outside of our current remit,” the regulator concedes in the letter. “This highlights some issues which we are exploring further, and which may be important as we develop a new regulatory framework for medical devices in the UK.”

An update to pan-EU medical devices regulation, which will bring in new requirements for software-based medical devices and had originally been intended to be implemented in the UK in May last year, will no longer take place there, given the country has left the bloc.

The UK is instead in the process of formulating its own regulatory update for medical device rules. This means there is still a gap around software-based ‘healthtech’, which isn’t expected to be fully plugged for several years. (Although Watkins notes there have been some tweaks to the regime, such as a partial lifting of confidentiality requirements last year.)

In a speech last year, health secretary Hancock told parliament that the government aimed to formulate a regulatory system for medical devices that is “nimble enough” to keep up with tech-fuelled developments such as health wearables and AI while “maintaining and enhancing patient safety”. It will include giving the MHRA “a new power to disclose to members of the public any safety concerns about a device”, he said then.

In the meantime the existing (outdated) regulatory regime appears to be continuing to tie the regulator’s hands, at least with regard to what it can say in public about safety concerns. It has taken Watkins making the MHRA’s letter to him public for those concerns to come to light.

In the letter the MHRA writes that “confidentiality unfortunately binds us from saying more on any specific investigation”, although it also tells him: “Please be assured that your concerns are being taken seriously and if there is action to be taken, then we will.”

“Based on the wording of the letter, I think it was clear that they wanted to provide me with a message that we do hear you, that we understand what you’re saying, we acknowledge the concerns which you’ve raised, but we are limited by what we can do,” Watkins told us.

He also said he believes the regulator has engaged with Babylon over concerns he has raised these past three years, noting the company has made a number of changes after he raised specific queries. Examples include its T&Cs, which initially said the chatbot is not a medical device but were subsequently withdrawn and changed to acknowledge that it is, and claims it had made that the chatbot is “100% safe”, which were withdrawn after an intervention by the Advertising Standards Authority.

The chatbot itself has also been tweaked to put less emphasis on diagnosis as an outcome and more emphasis on the triage outcome, per Watkins.

“They’ve taken a piecemeal approach [to addressing safety issues with chatbot triage]. So I would flag an issue [publicly via Twitter] and they would only look at that very specific issue. Patients of that age, undertaking that exact triage assessment: ‘okay, we’ll fix that, we’ll fix that’, and they would put in place a [specific fix]. But sadly, they never spent time addressing the wider fundamental issues within the system. Hence, safety issues would repeatedly crop up,” he said, citing examples of multiple issues with cardiac triages that he also raised with the regulator.

“When I spoke to the people who work at Babylon they used to have to do these hard fixes… All they’d have to do is just kind of ‘dumb it down’ a bit. So, for example, for anyone with chest pain it would immediately say go to A&E. They would remove any thought process from it,” he added. (This of course also risks wasting healthcare resources, as he points out in remarks to the regulators.)

“That’s how they got around these issues over time. But it highlights the challenges and difficulties in developing these tools. It’s not easy. And if you try to do it quickly and don’t give it enough attention then you just end up with something which is useless.”

Watkins also suspects the MHRA has been involved in getting Babylon to remove certain pieces of hyperbolic promotional material related to the 2018 AI event from its website.

In one curious episode, also related to the 2018 event, Babylon’s CEO demoed an AI-powered interface that appeared to show real-time transcription of a patient’s words combined with an ‘emotion-scanning’ AI, which he said scanned facial expressions in real time to generate an assessment of how the person was feeling. Parsa went on to tell the audience: “That’s what we’ve done. That’s what we’ve built. None of this is for show. All of this will be either in the market or already in the market.”

However, neither feature has actually been brought to market by Babylon as yet. Asked about this last month, the startup told TechCrunch: “The emotion detection functionality, seen in old versions of our clinical portal demo, was developed and built by Babylon’s AI team. Babylon conducts extensive user testing, which is why our technology is continually evolving to meet the needs of our patients and clinicians. After undergoing pre-market user-testing with our clinicians, we prioritised other AI-driven features in our clinical portal over the emotion recognition function, with a focus on improving the operational aspects of our service.”

“I actually found [the MHRA’s letter] very reassuring and I strongly suspect that the MHRA have been engaging with Babylon to address concerns that have been identified over the past three year period,” Watkins also told us today. “The MHRA do not appear to have been ignoring the issues but Babylon simply deny any problems and can sit behind the confidentiality clauses.”

In a statement on the current regulatory situation for software-based medical devices in the UK, the MHRA told us:

The MHRA ensures that manufacturers of medical devices comply with the Medical Devices Regulations 2002 (as amended). Please refer to existing guidance.

The Medicines and Medical Devices Act 2021 provides the foundation for a new, improved regulatory framework that is currently being developed. It will consider all aspects of medical device regulation, including the risk classification rules that apply to Software as a Medical Device (SaMD).

The UK will continue to recognise CE marked devices until 1 July 2023. After this time, requirements for the UKCA Mark must be met. This will include the revised requirements of the new framework that is currently being developed.

The Medicines and Medical Devices Act 2021 allows the MHRA to undertake its regulatory activities with a greater level of transparency and to share information where that is in the interests of patient safety.

The regulator declined to be interviewed or to respond to questions about the concerns it says, in the letter to Watkins, that it shares about Babylon, telling us: “The MHRA investigates all concerns but does not comment on individual cases.”

“Patient safety is paramount and we will always investigate where there are concerns about safety, including discussing those concerns with individuals that report them,” it added.

Watkins raised one more salient point on the issue of patient safety for ‘innovative’ tech tools, asking: where is the “real life clinical data”? So far, he says, the studies patients have to go on are limited assessments, often made by the chatbot makers themselves.

“It’s one quite telling thing about this sector, the fact that there’s very little real life data out there,” he said. “These chatbots have been around for a few years now… And there’s been enough time to get real life clinical data and yet it hasn’t appeared, and you just wonder if, is that because in the real-life setting they’re actually not quite as useful as we think they are?”