
Artificial intelligence will be key to helping humanity travel to new frontiers and solve problems that today seem insurmountable. It enhances human expertise, makes predictions more accurate, automates decisions and processes, frees people to focus on higher-value work, and improves our overall efficiency.

But public trust in the technology is at a low point, and there is good reason for that. Over the past several years, we have seen multiple examples of AI that makes unfair decisions, that gives no explanation for its decisions, or that can be hacked.

To get to trustworthy AI, organizations have to resolve these problems with investments on three fronts. First, they need to nurture a culture that adopts and scales AI safely. Second, they need to create investigative tools to see inside black box algorithms. And third, they need to make sure their corporate strategy includes strong data governance principles.

1. Nurturing the culture

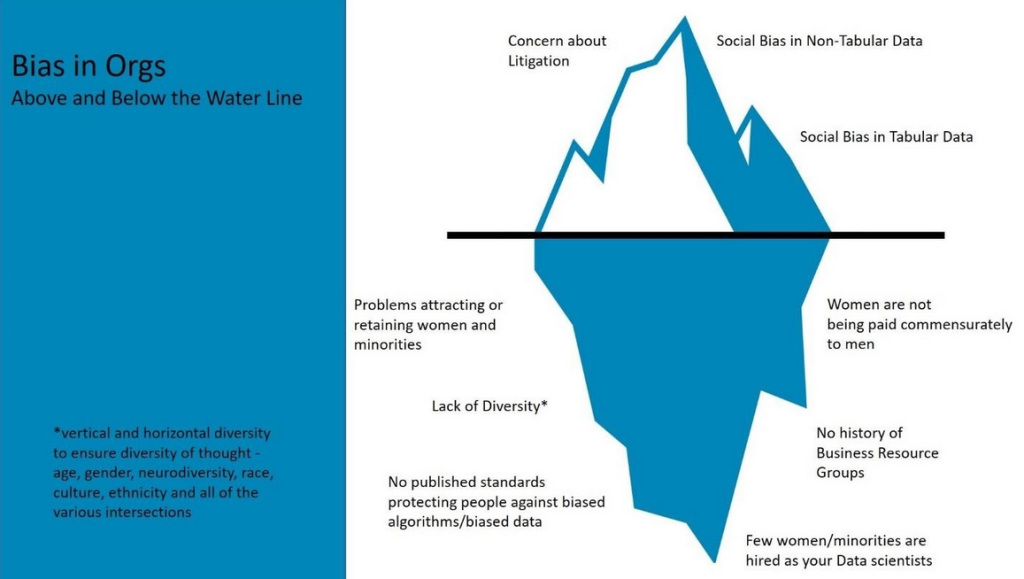

Trustworthy AI depends on more than just the responsible design, development, and use of the technology. It also depends on having the right organizational operating structures and culture. For example, many companies that have concerns about bias in their training data have also expressed concern that their work environments are not conducive to bringing women and minorities into their ranks. There is, indeed, a very direct correlation. To get started on this culture shift, organizations need to define what responsible AI looks like within their function, why it is unique, and what the specific challenges are.

To ensure fair and transparent AI, organizations must pull together task forces of stakeholders from different backgrounds and disciplines to design their approach. This method reduces the likelihood that underlying prejudice in the data used to create AI algorithms will result in discrimination and other social consequences.

Task force members should include experts and leaders from various domains who can understand, anticipate, and mitigate relevant issues as necessary. They must have the resources to develop, test, and quickly scale AI technology.

For example, machine learning models for credit decisioning can exhibit gender bias, unfairly discriminating against female borrowers if left unchecked. A responsible-AI task force can roll out design thinking workshops to help designers and developers think through the unintended consequences of such an application and find solutions. Design thinking is foundational to a socially responsible AI approach.

To ensure this new thinking becomes ingrained in the company culture, all stakeholders across an organization, from data scientists and CTOs to Chief Diversity and Inclusivity officers, must play a role. Fighting bias and ensuring fairness is a socio-technological challenge. It is solved when employees who may not be used to collaborating with each other start doing so, specifically about data and the impacts models can have on historically disadvantaged people.

2. Trustworthy tools

Organizations should seek out tools to monitor the transparency, fairness, explainability, privacy, and robustness of their AI models. These tools can point teams to problem areas so that they can take corrective action (such as introducing fairness criteria in the model training and then verifying the model output).

Here are some examples of such investigative tools:

There are versions of these tools that are freely available as open source and others that are commercially available. When choosing these tools, it is important to first consider what you need the tool to actually do and whether you need it to work on production systems or on those still in development. You must then determine what kind of support you need and at what price, breadth, and depth. An important consideration is whether the tools are trusted and referenced by global standards boards.
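To make the idea of verifying model output against a fairness criterion more concrete, here is a minimal Python sketch. It is an illustration only, not any particular vendor's tool: the function names, the sample data, and the 0.8 threshold (a commonly cited rule of thumb for approval-rate ratios) are all assumptions introduced for this example. It compares approval rates across groups for a hypothetical credit decisioning model and flags a large gap.

```python
from collections import defaultdict


def approval_rates(decisions, groups):
    """Return the fraction of approved (truthy) decisions for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        if decision:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def fairness_report(decisions, groups, threshold=0.8):
    """Summarize the approval-rate gap between groups.

    threshold is an assumed policy value, not a universal standard:
    the report is flagged when the lowest approval rate falls below
    threshold times the highest approval rate.
    """
    rates = approval_rates(decisions, groups)
    lowest, highest = min(rates.values()), max(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": ratio,
        "flagged": ratio < threshold,
    }


# Hypothetical model outputs: 1 = approved, 0 = declined.
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["M", "M", "M", "M", "M", "F", "F", "F", "F", "F"]

report = fairness_report(decisions, groups)
print(report)
```

A check like this only surfaces a disparity. Deciding which fairness criterion applies, and what to do when it is violated, remains a question for the cross-disciplinary task force described earlier.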

3. Developing data and AI governance

Any organization deploying AI must have clear data governance in effect. This includes building a governance structure (committees and charters, roles and responsibilities) as well as creating policies and procedures on data and model management. With respect to human and automated governance, organizations should adopt frameworks for healthy dialog that help craft data policy.

This is an opportunity to promote data and AI literacy in an organization. For highly regulated industries, organizations can find specialized tech partners that can also make sure that the model risk management framework meets supervisory standards.

There are dozens of AI governance boards around the world that are working with industry to help set standards for AI. IEEE is one example. IEEE is the largest technical professional organization dedicated to advancing technology for the benefit of humanity. The IEEE Standards Association, a globally recognized standards-setting body within IEEE, develops consensus standards through an open process that engages industry and brings together a broad stakeholder community. Its work encourages technologists to prioritize ethical considerations in the creation of autonomous and intelligent technologies. Such international standards bodies can help guide your organization to adopt standards that are right for you and your market.

Conclusion

Curious how your org ranks when it comes to AI-ready culture, tooling, and governance? Assessment tools can help you determine how well prepared your organization is to scale AI ethically on these three fronts.

There is no magic pill for making your organization a truly responsible steward of artificial intelligence. AI is meant to augment and enhance your current operations, and a deep learning model can only be as open-minded, diverse, and inclusive as the team developing it.

Phaedra Boinodiris, FRSA, is an executive consultant on the Trust in AI team at IBM and is currently pursuing her PhD in AI and Ethics. She has focused on inclusion in technology since 1999 and is a member of the Cognitive World Think Tank on enterprise AI.
