
After Amazon's three-week re:Invent conference, companies building AI applications may feel that AWS is the only game in town. Amazon announced improvements to SageMaker, its machine learning (ML) workflow service, and to Edge Manager, improving AWS ML capabilities on the edge at a time when serving the edge is considered increasingly important for enterprises. The company also touted big customers like Lyft and Intuit.

But Mohammed Farooq believes there is a better alternative to the Amazon hegemon: an open AI platform that doesn't have any hooks back to the Amazon cloud. Until earlier this year, Farooq led IBM's hybrid multi-cloud strategy, but he recently left to join the enterprise AI company Hypergiant.

Here is our Q&A with Farooq, who is Hypergiant's chair, global chief knowledge officer, and general manager of products. He has skin in the game and makes an interesting argument for open AI.

VentureBeat: With Amazon's momentum, isn't it game over for any other company wanting to be a big provider of AI services, or at least for any competitor not named Google or Microsoft?

Mohammed Farooq: On the one hand, for the last three to five-plus years, AWS has delivered outstanding capabilities with SageMaker (Autopilot, Data Wrangler) to enable accessible analytics and ML pipelines for nontechnical and technical users. Enterprises have built strong-performing AI models with these AWS capabilities.

On the other hand, the enterprise production throughput of performant AI models is very low. The low throughput is a result of the complexity of deploying and managing the operations of AI models within consuming production applications that run on AWS and other cloud, datacenter, and software platforms.

Enterprises haven't established an operations management system, something referred to in the industry as ModelOps. ModelOps is required and should include things like lifecycle processes, best practices, and business management controls. These are necessary to evolve the AI models and handle data changes in the context of the underlying heterogeneous software and infrastructure stacks currently in operation.

AWS does a solid job of automating an AI ModelOps process within the AWS ecosystem. However, running enterprise ModelOps, as well as DevOps and DataOps, will involve not only AWS but multiple other cloud, network, and edge architectures. AWS is great as far as it goes, but what's required is seamless integration with enterprise ModelOps, hybrid/multi-cloud infrastructure architecture, and the IT operations management system.

Today, successful AI models that deliver value and that business leaders trust take 6-12 months to build. An enterprise ModelOps process integrated with the rest of enterprise IT is required to speed up and scale AI solutions in the enterprise.

I would argue that we're on the precipice of a new era in artificial intelligence, one where AI will not only predict but recommend and take autonomous actions. But machines are still acting on AI models that are poorly tested and fail to meet defined business goals (key performance indicators).

VentureBeat: So what is it that holds the industry back? Or, asked a different way, what is it that holds Amazon back from doing this?

Farooq: To improve the development and performance of AI models, I believe we must address three challenges that are slowing down AI model development, deployment, and production management in the enterprise. Amazon and other big players haven't been able to address these challenges yet. They are:

AI data: This is where everything starts and ends in performant AI models. Purview is a direct attempt to address the data problems under the enterprise data governance umbrella.

AI operations processes: These are enabled for development and deployment in the cloud (AWS) and don't extend or connect to the enterprise DevOps, DataOps, and ITOps processes. AIOps processes to deploy, operate, manage, and govern need to be automated and integrated into enterprise IT processes. This will industrialize AI in the enterprise. It took DevOps 10 years to establish CI/CD processes and automation platforms. AI needs to leverage the existing assets in CI/CD and overlay AI model lifecycle management on top of them.

AI architecture: Enterprises with native cloud and containers are accelerating on the path to hybrid and multi-cloud architectures. AI architecture needs to work on distributed architectures across hybrid and multi-cloud infrastructure and data environments.

To develop the next phase of AI, which I'm calling "industrialized AI in the enterprise," we need to address all of these. They can only be met with an open AI platform that has an integrated operations management system.
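To make the idea of overlaying AI model lifecycle management on existing CI/CD more concrete, here is a minimal Python sketch. It assumes a pipeline that already builds and deploys artifacts, and adds one model-specific step: a gate that compares a candidate model's offline metrics against business KPI thresholds before the existing deploy job runs. Every name here (`ModelCandidate`, `kpi_gate`, `promote`, the stage names, metric names, and thresholds) is hypothetical and illustrative; it is not Hypergiant's or AWS's implementation.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    """Hypothetical lifecycle stages layered on an existing CI/CD flow."""

    BUILT = "built"
    VALIDATED = "validated"
    DEPLOYED = "deployed"
    REJECTED = "rejected"


@dataclass
class ModelCandidate:
    name: str
    version: str
    metrics: dict[str, float]  # offline evaluation results, e.g. {"accuracy": 0.93}
    stage: Stage = Stage.BUILT
    history: list[str] = field(default_factory=list)


def kpi_gate(candidate: ModelCandidate, thresholds: dict[str, float]) -> list[str]:
    """Return the KPIs the candidate misses; a missing metric counts as a miss."""
    return [
        kpi
        for kpi, minimum in thresholds.items()
        if candidate.metrics.get(kpi, float("-inf")) < minimum
    ]


def promote(candidate: ModelCandidate, thresholds: dict[str, float]) -> ModelCandidate:
    """Model-specific gate that runs before the pipeline's usual deploy step."""
    misses = kpi_gate(candidate, thresholds)
    if misses:
        candidate.stage = Stage.REJECTED
        candidate.history.append("rejected: below threshold on " + ", ".join(misses))
        return candidate
    candidate.stage = Stage.VALIDATED
    candidate.history.append("validated against business KPIs")
    # Placeholder: a real pipeline would trigger its existing CI/CD deploy job here.
    candidate.stage = Stage.DEPLOYED
    candidate.history.append("handed off to existing deploy job")
    return candidate


# Usage with made-up thresholds and metrics.
thresholds = {"accuracy": 0.90, "recall": 0.80}
good = promote(ModelCandidate("churn", "1.2.0", {"accuracy": 0.93, "recall": 0.85}), thresholds)
bad = promote(ModelCandidate("churn", "1.3.0", {"accuracy": 0.95}), thresholds)
```

Treating a missing metric as a failure is a deliberate choice in this sketch: a model that was never evaluated against a KPI should not reach production by default.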

VentureBeat: Explain what you mean by an "open" AI platform.

Farooq: An open AI platform for ModelOps lets enterprise AI teams mix and match required AI stacks, data services, AI tools, and domain AI models from different providers. Doing so will result in powerful business solutions at speed and scale.

AWS, with all of its powerful cloud, AI, and edge offerings, has still not stitched together a ModelOps that can industrialize AI and cloud. Enterprises today are using a combination of ServiceNow, legacy systems management, DevOps tooling, and containers to bring this together. AI operations adds another layer of complexity to an already increasingly complex model.

An enterprise AI operations management system should be the master control point and the system of record, intelligence, and security for all AI solutions in a federated model (AI models and data catalogs). AWS, Azure, or Google can provide data, process, and tech platforms and services to be consumed by enterprises.

Lock-in models, like those currently being offered, harm enterprises' ability to develop core AI capabilities. Companies like Microsoft, Amazon, and Google are hampering our ability to build superior solutions by constructing moats around their products and services. The path to the best technology solutions, in the service of both AI providers and consumers, is one where choice and openness are prized as a pathway to innovation.

You have seen companies articulate a prominent vision for the future of AI. But I believe they are limited because they are not going far enough to democratize AI access and usage with the current enterprise IT Ops and governance process. To move forward, we need an enterprise ModelOps process and an open AI services integration platform that industrializes AI development, deployment, operations, and governance.

Without these, enterprises will be forced to choose vertical solutions that fail to integrate with enterprise data technology architectures and IT operations management systems.

VentureBeat: Has anyone tried to build this open AI platform?

Farooq: Not really. To manage AI ModelOps, we need a more open and connected AI services ecosystem, and to get there, we need an AI services integration platform. This essentially means that we need cloud provider operations management integrated with enterprise AI operations processes and a reference architecture framework (led by the CTO and IT operations).

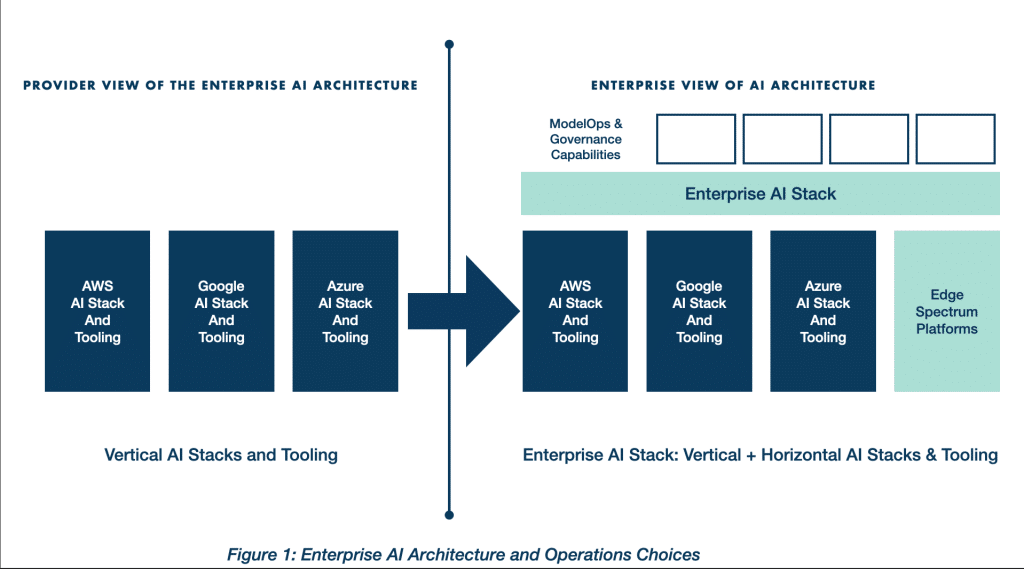

There are two options for enterprise CIOs, architects, CTOs, and CEOs. One is vertical, and the other one is horizontal.


Dataiku, Databricks, Snowflake, C3.AI, Palantir, and many others are building these horizontal AI stack options for the enterprise. Their solutions operate on top of AWS, Google, and Azure AI.

VentureBeat: So how is the vision of what you're building at Hypergiant different from those efforts?

Farooq: The choice is clear: We have to enable an enterprise AI stack, ModelOps tooling, and governance capabilities through an open AI services integration platform. This will operate and integrate customer ModelOps and governance processes internally in a way that can work for every enterprise system and AI project.

What we need is not another AI company, but rather an AI services integrator and operator layer that improves how these companies work together toward enterprise business goals.

A customer should be able to use Azure solutions, MongoDB, and Amazon Aurora, depending on what best fits their needs, price points, and future agenda. What this requires is a mesh layer for AI solution providers.

VentureBeat: Can you define this "mesh layer" further? Is it as simple as plugging your AI solution in on top and then getting access to any cloud data source underneath?

Farooq: The data mesh layer is the core component, not only for executing ModelOps processes across cloud, 5G, and edge, but also as a core architectural component for building, operating, and managing autonomous distributed applications.

Currently, we have cloud data lakes and data pipelines (batch or streaming) as an input to train and build AI models. But in production, data must be dynamically orchestrated across datacenters, cloud, edge, and 5G end nodes. This will ensure that the AI models and the consuming apps always have the data feeds they need in production to execute.

AI/cloud developers and ModelOps teams should have access to data orchestration rules and policy APIs as a single interface to build, operate, and design AI solutions across distributed environments. This API should hide the complexity of the underlying distributed environments (i.e., cloud, 5G, or edge).
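One way to picture such a single data-orchestration interface is the minimal Python sketch below. It assumes hypothetical sources (a cloud lake, an edge node, a 5G edge site) that each expose the same `has`/`read` methods, plus a per-dataset residency policy; callers ask the mesh for a dataset by name and never see which environment serves it. The routing rule (the lowest-latency source the policy permits) is an illustrative assumption, and none of the names correspond to a real data mesh product or API.

```python
from dataclasses import dataclass, field
from typing import Protocol


class DataSource(Protocol):
    """Anything the mesh can route reads to (hypothetical interface)."""

    location: str
    region: str
    latency_ms: float

    def has(self, dataset: str) -> bool: ...

    def read(self, dataset: str) -> list[dict]: ...


@dataclass
class InMemorySource:
    """Toy stand-in for a cloud lake, edge cache, or 5G edge site."""

    location: str
    region: str
    latency_ms: float
    datasets: dict[str, list[dict]] = field(default_factory=dict)

    def has(self, dataset: str) -> bool:
        return dataset in self.datasets

    def read(self, dataset: str) -> list[dict]:
        return self.datasets[dataset]


@dataclass
class Policy:
    """Hypothetical orchestration rule: regions a dataset may be served from."""

    allowed_regions: set[str]


class DataMesh:
    """Single entry point that hides which environment actually serves the data."""

    def __init__(self) -> None:
        self._sources: list[DataSource] = []
        self._policies: dict[str, Policy] = {}

    def register(self, source: DataSource) -> None:
        self._sources.append(source)

    def set_policy(self, dataset: str, policy: Policy) -> None:
        self._policies[dataset] = policy

    def read(self, dataset: str) -> tuple[str, list[dict]]:
        policy = self._policies.get(dataset)
        permitted = [
            s for s in self._sources
            if s.has(dataset) and (policy is None or s.region in policy.allowed_regions)
        ]
        if not permitted:
            raise LookupError(f"no permitted source holds {dataset!r}")
        # Prefer the nearest permitted copy; a real mesh would weigh more factors.
        best = min(permitted, key=lambda s: s.latency_ms)
        return best.location, best.read(dataset)


# Usage: the caller names the dataset, the mesh picks the source.
rows = [{"sensor": 1, "temp_c": 21.5}]
mesh = DataMesh()
mesh.register(InMemorySource("cloud-lake", "us", 80.0, {"telemetry": rows}))
mesh.register(InMemorySource("edge-node-7", "eu", 5.0, {"telemetry": rows}))
mesh.register(InMemorySource("5g-site-2", "us", 12.0, {"telemetry": rows}))
mesh.set_policy("telemetry", Policy(allowed_regions={"us"}))
location, data = mesh.read("telemetry")
```

Without the residency policy, `edge-node-7` would win on latency; the policy is what routes this read to `5g-site-2`, and the caller's code does not change either way.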

In addition, we need packaging and container specs that help DevOps and ModelOps professionals use the portability of Kubernetes to quickly deploy and operate AI solutions at scale.

These data mesh APIs and packaging technologies need to be open-sourced to ensure that we establish an open AI and cloud stack architecture for enterprises, not walled gardens from big providers.

By analogy, look at what Twilio has done for communications: Twilio strengthened customer relationships across businesses by integrating many technologies in one easy-to-manage interface. Examples in other industries include HubSpot in marketing and Squarespace for website development. These companies work by providing infrastructure that simplifies the user's experience across the tools of many different companies.

VentureBeat: When are you launching this?

Farooq: We're planning to launch a beta version of the first step of that roadmap early next year [Q1/2020].

VentureBeat: AWS has a reseller policy. Could it crack down on any mesh layer if it wanted to?

Farooq: AWS could build and offer its own mesh layer that is tied to its cloud and that interfaces with the 5G and edge platforms of its partners. But this will not help its enterprise customers accelerate the development, deployment, and management of AI and hybrid/multi-cloud solutions at speed and scale. Collaborating with the other cloud and ISV providers, as it has done with Kubernetes (a CNCF-led open source project), will benefit AWS significantly.

As further innovation on centralized cloud computing models has stalled (based on current functionality and incremental releases across AWS, Azure, and Google), data mesh and edge native architectures are where innovation will need to happen, and a distributed (declarative and runtime) data mesh architecture is a great place for AWS to lead the industry and contribute.

The digital enterprise will be the biggest beneficiary of a distributed data mesh architecture, and this will help industrialize AI and digital platforms faster, thereby creating new economic opportunities and, in turn, more spending on AWS and other cloud provider technologies.

VentureBeat: What impact would such a mesh-layer solution have on the major cloud companies? I imagine it could influence user decisions on which underlying services to use. Could that middle mesh player reduce pricing for certain packages, undercutting marketing efforts by the cloud players themselves?

Farooq: The data mesh layer will trigger massive innovation in edge and 5G native (not cloud native) applications, middleware, and infrastructure architectures. This will drive the large providers to rethink their product roadmaps, architecture patterns, go-to-market partnerships, investments, and offerings.

VentureBeat: If the cloud companies see this coming, do you think they'll be more likely to move toward an open ecosystem more rapidly and squelch you?

Farooq: The big providers in a first or second cycle of evolution of a technology or business model will always want to build a moat and lock in enterprise clients. For example, AWS never accepted that hybrid or multi-cloud was needed. But in the second cycle of cloud adoption by VMware clients, VMware started to evangelize an enterprise-outward hybrid cloud strategy connecting to AWS, Azure, and Google.

AWS now implements its API across the AWS public cloud and Outposts. In short, they came around.

The same will happen with edge, 5G, and distributed computing. Right now, AWS, Google, and Azure are building their own distributed computing platforms. However, the power of the open source community and its pace of innovation are so great that the distributed computing architecture in the next cycle and beyond will have to move to an open ecosystem.

VentureBeat: What about lock-in at the mesh-layer level? If I choose to go with Hypergiant so I can access services across clouds, and then a competing mesh player emerges that offers better prices, how easy is it to move?

Farooq: We at Hypergiant believe in an open ecosystem, and our go-to-market business model depends on being at the intersection of enterprise consumption and provider solutions. We drive consumption economics, not provider economics. This will require us to support multiple data mesh technologies and build a product for interoperation with a single interface for our clients. The ultimate goal is to ensure an open ecosystem, developer and operator ease, and value to enterprise clients so that they can accelerate their business and revenue strategies by leveraging the best value and the best breed of technologies. We're looking at this from the point of view of the benefits to the enterprise, not the provider.

VentureBeat's mission is to be a digital town square for technical decision-makers to gain knowledge about transformative technology and transact.

Our website provides important data on details used methods and sciences to information you as you lead your organizations. We invite you to become a member of our group, to entry:.